4 Ways to Write Data To Parquet With Python: A Comparison | by Antonello Benedetto | Towards Data Science

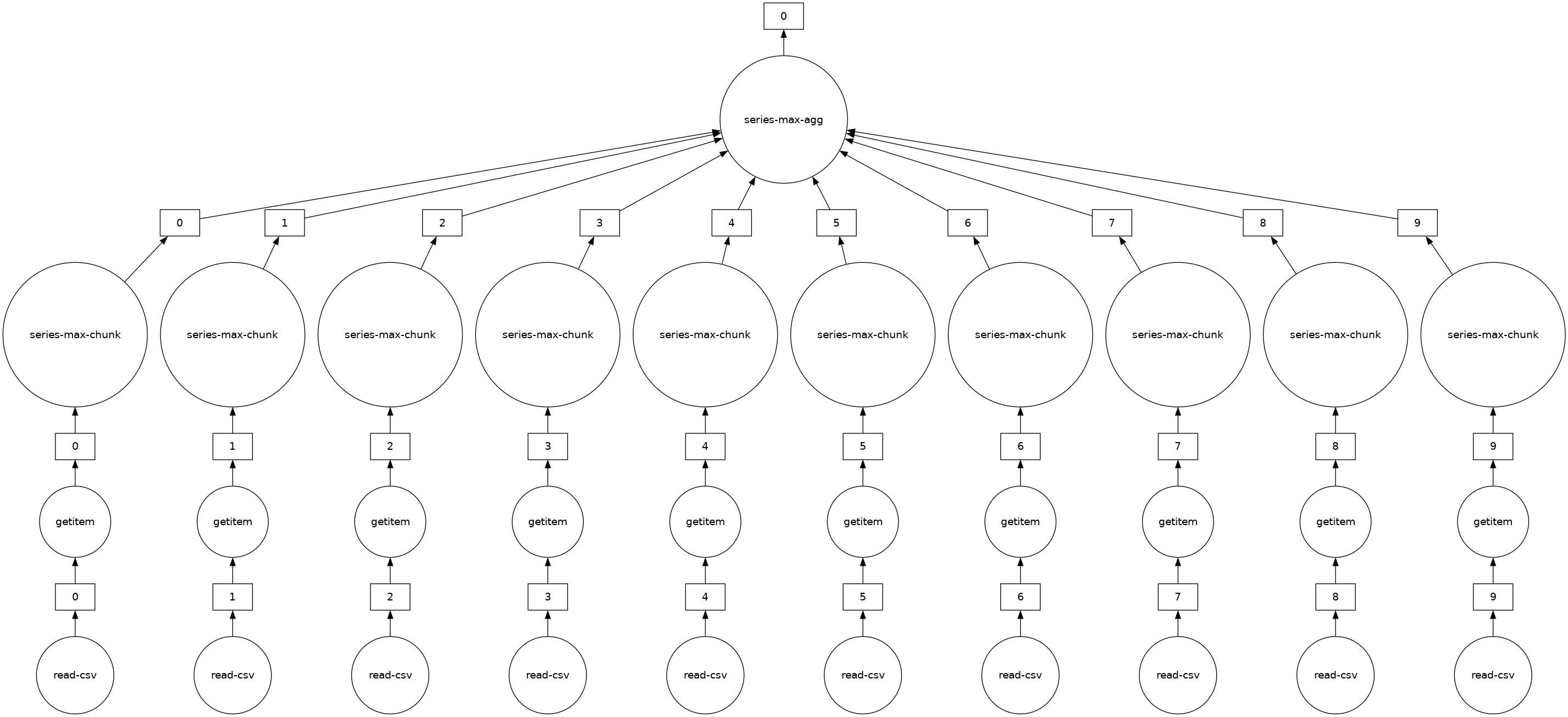

Converting Huge CSV Files to Parquet with Dask, DuckDB, Polars, Pandas. | by Mariusz Kujawski | Medium

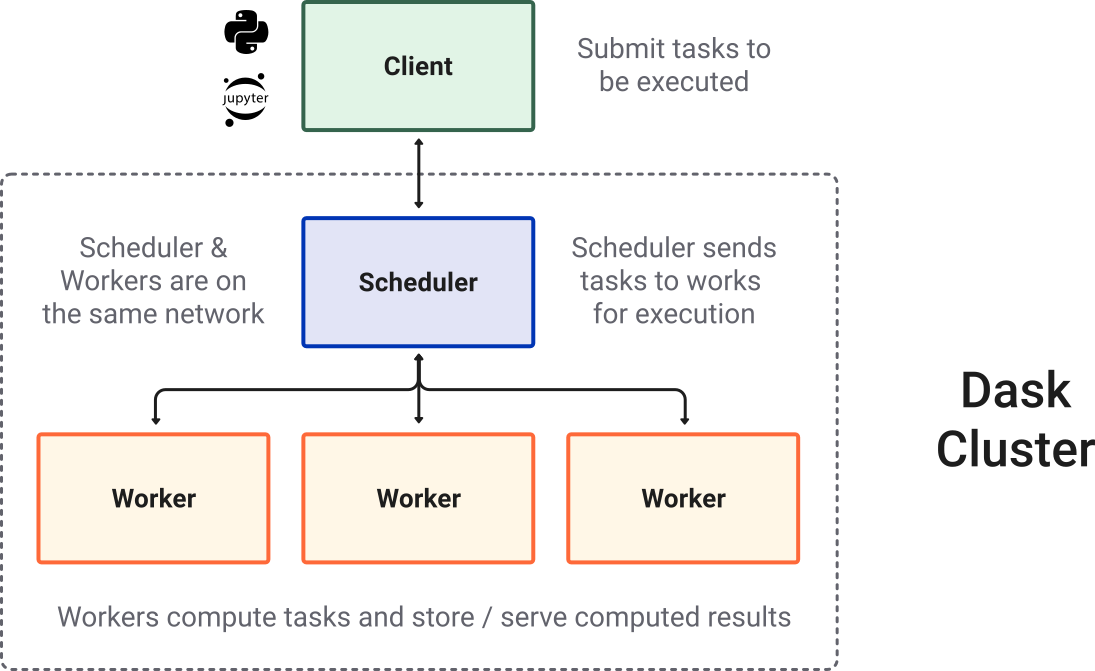

Run Heavy Prefect Workflows at Lightning Speed with Dask | by Richard Pelgrim | Towards Data Science

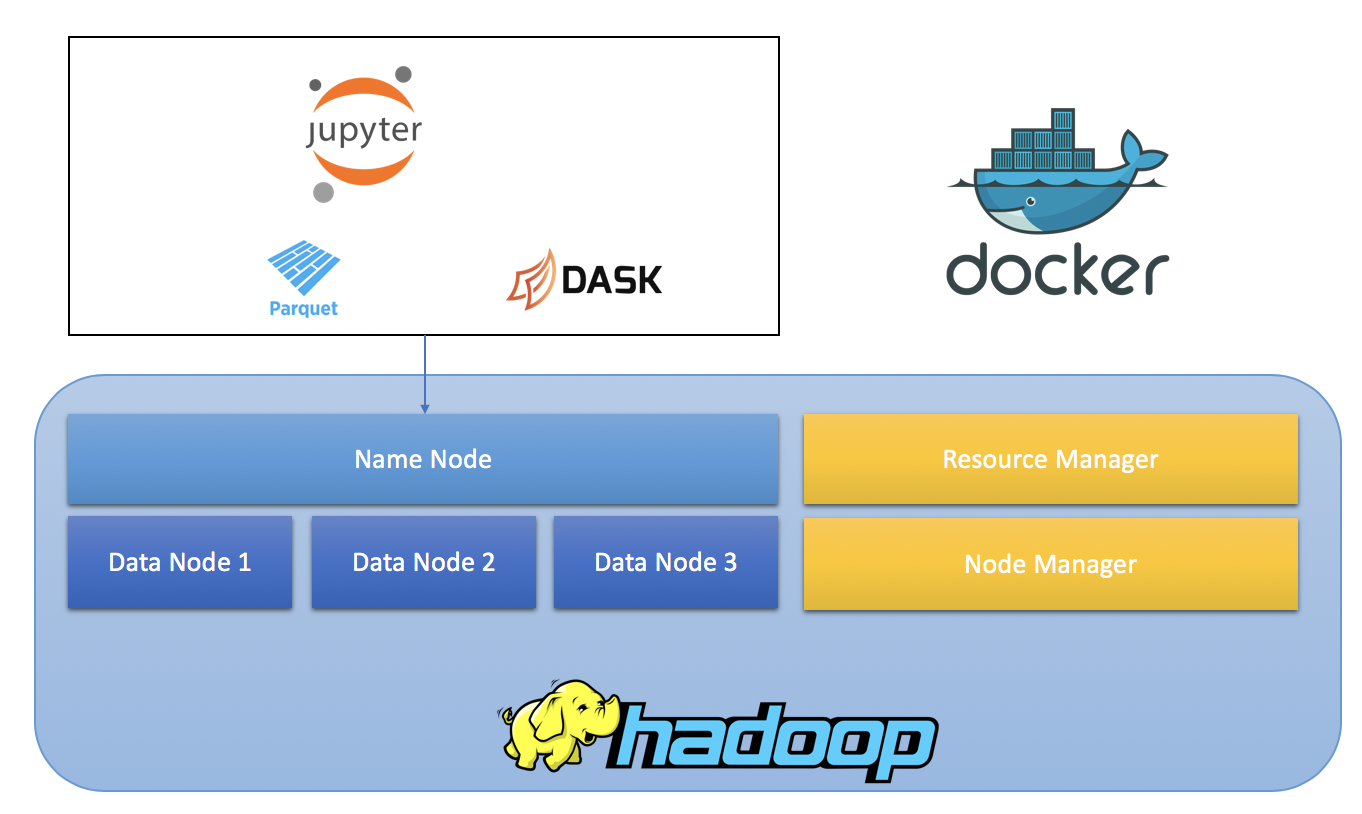

Writing to parquet with `.set_index("col", drop=False)` yields: `ValueError(f"cannot insert {column}, already exists")` · Issue #9328 · dask/dask · GitHub